Educated guesswork can only get you so far. If you really want to understand whether the changes you are making to your website are having the desired impact, you need to start A/B testing.

Happily, A/B testing is more accessible than it’s ever been, with easy-to-use tools now readily available. However, as a marketer it’s vital that you know the fundamentals before you invest in and run A/B tests. The last thing you want to do is waste resources and money.

Follow these 9 must-know tips and get the most out of your A/B testing.

1. Create a Test Hypothesis

Testing random ideas wastes precious time and traffic. The easiest way around this is to ensure you have a robust test hypothesis.

A hypothesis is a proposed statement made on the basis of limited evidence that can be proved or disproved. It is used as a starting point for further investigation.

Test hypotheses should come from proper conversion research, including quantitative and qualitative methods. The more research and data you gather, the better your hypothesis will be and the more likely you are to find significant improvements.

If you test A vs B without a clear hypothesis and B wins by 20%, what have you learned? Very little. You know B won, but not why, so your insight into the audience is limited. A hypothesis helps us improve our customer theory and come up with even better tests.

A useful hypothesis formula for your A/B tests:

[1] Because we saw (data/feedback)

[2] We expect that (change) will cause (impact)

[3] We’ll measure this using (data metric)

2. Have Enough Traffic & Conversions

To complete a statistically valid test within a reasonable timescale, you need to make sure you have enough traffic and conversions.

Avoid waiting many months for a test result. This wastes precious time and resources that could have been spent taking advantage of other opportunities.

So, how much traffic do you need? This depends on the test and the number of variations.

The more traffic and conversions you have, the more confidence you can have in the data. At the very least, strive for 250 conversions per variation, but more often than not you’ll need 500 or more.

I strongly advise using a sample size calculator before running any test. This will give you an indication of the sample size required for meaningful inferences.

There are a number of useful calculators available online. Evan Miller’s is a popular one, or you could try Optimizely’s.
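To show roughly what such a calculator does under the hood, here is a minimal Python sketch. It assumes the common two-sided, two-proportion z-test formula with 95% significance and 80% power; online calculators such as Evan Miller’s or Optimizely’s may use different defaults or methods, so their figures can differ. The function name and defaults are illustrative, not taken from any tool.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float,
                              relative_mde: float,
                              alpha: float = 0.05,
                              power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variation for a two-sided
    two-proportion z-test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)        # smallest lift worth detecting
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Example: 5% baseline conversion rate, aiming to detect a 20% relative lift.
    n = sample_size_per_variation(baseline_rate=0.05, relative_mde=0.20)
    print(f"Visitors needed per variation: {n}")
    print(f"Expected conversions per variation: {n * 0.05:.0f}")
```

Note how quickly the required sample grows as the lift you want to detect shrinks: halving the minimal detectable effect roughly quadruples the traffic you need.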

Don’t have enough traffic?

A/B testing might not be suitable. If you have a low volume of traffic and conversions per month, rely on your conversion research instead.

Get feedback from customers, analyse user behaviour in Google Analytics or carry out user testing. Then go with the changes and keep an eye on your bottom line.

At the end of the day, you’re striving to improve your ‘north star’ metric, so be bold in your changes. You don’t want to hinder progress by waiting months to start a test, then waiting even longer for it to reach statistical significance.

3. Use WYSIWYG Editors with Caution

Most testing tools feature a ‘what you see is what you get’ (WYSIWYG) editor. This interface allows you to edit a webpage easily for your test variation(s) without the need for coding knowledge.

However, we advise caution. Not only do WYSIWYG editors limit your ability to make significant changes, they can also play havoc with your code.

Moving, deleting or rearranging elements may look fine in the editor, but there’s a risk the page won’t render correctly on certain screen resolutions, devices or browsers.

For tests that require significant changes, seek support from an experienced web developer so you don’t break your website’s code.

4. QA Your Tests!

It’s vital to conduct quality assurance (QA) testing before deploying an A/B test. This will ensure your test variation(s) don’t contain any bugs and work correctly on all browsers and devices.

Failure to do so risks the validity of the test. For example, if your variation doesn’t display correctly on Internet Explorer then your test data will be flawed. Not to mention it’s a potentially costly experience for some of your users.

The bottom line is that you should QA your tests on all major devices and browsers.

If you’re unsure which browsers and devices to prioritise, check the browser and device reports in Google Analytics.

5. Test for Long Enough

Reaching statistical significance is not a stopping point. As well as having enough traffic you’ll also want to ensure the test runs long enough to be confident that the result is sound.

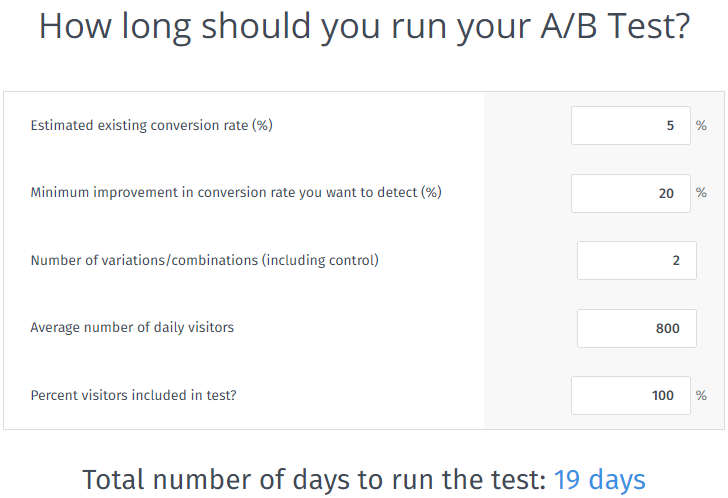

Calculators such as VWO’s indicate the minimum number of days you should run a test.

Let’s say you want to run an A/B test on one of your key landing pages. From Google Analytics you find that (i) the page averages approximately 800 visitors a day and (ii) its typical conversion rate is 5%.

The minimal detectable effect you would like to see is 20%, meaning the smallest improvement you want to detect is a rise in conversion rate from 5% to at least 6%. Entering these figures into the VWO calculator gives a recommended test duration of at least 19 days.

As a rule, my advice is to run a test for between 2 and 5 weeks. Any shorter and you run the risk of getting a false positive; any longer and you risk sample pollution through cookie deletion.

What else?

- Avoid jumping to conclusions – Only stop a test early if a variation is performing so poorly that it threatens your business goals

- Be aware of the novelty effect – Consider whether an increase in conversion rate is the result of returning visitors being drawn to something new rather than a lasting improvement. Segment results by new vs returning visitors.

- Test for a minimum of two business cycles (for e-commerce businesses, a business cycle is typically a week)

- Test whole cycles – if you start a test on a Monday, end it on a Monday.

- Check the result is stable – it shouldn’t be moving around hugely at the top level or within segments, and the error intervals for each variation shouldn’t overlap much

6. Be Aware of External Factors

Throughout your testing you’ll need to be aware of threats that could skew your data. Here are some common examples:

Broken code – If the test variation doesn’t work correctly on a certain browser/device combination, it will impact the validity of your test. Go through a QA process, keep an eye on your test after go-live and check performance in key segments.

Outliers – eCommerce businesses can be affected by bulk orders and bulk buyers. Depending on which variation these customers see, they can skew your results: transactions, revenue and average revenue per visitor will all be distorted. Analyse these orders closely and remove any outliers.
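One simple way to remove outliers is a percentile cut-off on order value. The sketch below assumes that anything above the 99th percentile counts as an outlier; that threshold is a judgement call, and some teams cap (winsorise) large orders instead of dropping them. Whatever rule you pick, apply it identically to every variation. The function name is hypothetical.

```python
from statistics import quantiles


def remove_order_outliers(order_values: list[float], percentile: int = 99) -> list[float]:
    """Drop orders whose value is above the given percentile."""
    if len(order_values) < 2:
        return list(order_values)
    # 99 cut points splitting the data into 100 groups; index 98 is the 99th percentile.
    cut_points = quantiles(order_values, n=100, method="inclusive")
    threshold = cut_points[percentile - 1]
    return [v for v in order_values if v <= threshold]


if __name__ == "__main__":
    # Hypothetical data: 99 typical orders and one bulk order.
    orders = [45.0] * 99 + [4_800.0]
    cleaned = remove_order_outliers(orders)
    print(len(orders), "->", len(cleaned), "orders")
```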

Season & Weather – If you run a test during the holiday season, know that the test outcome is only relevant to that season. If your business is highly seasonal or weather impacted then validate your test result in another time period.

Internal Campaigns – If you’ve just started a large PPC campaign, or sent out an email blast, chances are that traffic won’t behave as predictably as your usual traffic. The results of the campaigns can, therefore, influence your A/B test results. Be sure to communicate your testing program to your marketing team and colleagues.

Competitor Activity – Your traffic could change if a competitor launches a major campaign, or even goes bust. You may see a peak in interest or an increase in comparison shopping, so be aware of these influences and the impact they could have on your tests.

7. Have a Basic Understanding of Statistics

Many conversion experts would say this is the top prerequisite for anyone wanting to A/B test effectively. I’ve already mentioned terms such as sample size (how much traffic you need), minimal detectable effect (the lift you are shooting for) and statistical significance (how unlikely the observed difference would be if the change had no real effect).

However, there are many other aspects involved in A/B testing. If terms like confidence interval, margin of error, p-value and statistical power mean little to you, I invite you to read up on them. Statistics in A/B testing can be complex and is a subject in its own right. Typically, if you follow these top-line rules you’ll avoid the most common mistakes:

- Test for two full business cycles

- Make sure your sample size is large enough (use a calculator before you start the test)

- Keep in mind confounding variables and external factors

- Avoid running multiple tests that overlap and skew your results

- Ensure the margins of error reported in your testing tool for each variation don’t overlap

- Aim for a 95% confidence level

- Analyse the trend in your results to make sure it’s consistent
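To make p-values and confidence intervals less abstract, here is a minimal sketch of a standard two-sided, two-proportion z-test, with a confidence interval for the difference in conversion rate between B and A. It is a textbook normal-approximation method, not necessarily what your testing tool uses internally, and the function name is hypothetical. Checking whether the interval for the difference excludes zero is closely related to the “error margins shouldn’t overlap” rule above.

```python
from math import sqrt
from statistics import NormalDist


def compare_conversion_rates(conv_a: int, visitors_a: int,
                             conv_b: int, visitors_b: int,
                             confidence: float = 0.95) -> dict:
    """Two-sided two-proportion z-test plus a confidence interval
    for the difference in conversion rate (B minus A)."""
    p_a = conv_a / visitors_a
    p_b = conv_b / visitors_b
    diff = p_b - p_a

    # Pooled standard error for the test, assuming no real difference.
    p_pool = (conv_a + conv_b) / (visitors_a + visitors_b)
    se_pooled = sqrt(p_pool * (1 - p_pool) * (1 / visitors_a + 1 / visitors_b))
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for the interval around the observed difference.
    se_diff = sqrt(p_a * (1 - p_a) / visitors_a + p_b * (1 - p_b) / visitors_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    ci = (diff - z_crit * se_diff, diff + z_crit * se_diff)

    return {
        "rate_a": p_a,
        "rate_b": p_b,
        "p_value": p_value,
        "ci_difference": ci,
        "significant": p_value < 1 - confidence,
    }


if __name__ == "__main__":
    # Hypothetical results: 400/8,000 conversions for A, 480/8,000 for B.
    result = compare_conversion_rates(400, 8_000, 480, 8_000)
    print(f"p-value: {result['p_value']:.4f}")
    low, high = result["ci_difference"]
    print(f"95% CI for the lift: {low:+.2%} to {high:+.2%}")
```

A significant result on its own is not a reason to stop; the duration and trend rules above still apply.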

8. Send Test Data to Analytics

Always integrate your A/B testing tool with Google Analytics. Testing tools typically report top-line numbers and have a limited ability to segment and slice up the data.

Remember, averages can lie. If version A beats version B by 10% that doesn’t give the full picture. There may be underlying segments performing differently and it’s important to uncover this insight.

Sending test data to Google Analytics allows you to build advanced segments and custom reports. This is useful when trying to understand the impact of your test and what you can learn from it.
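Here is a minimal sketch of the kind of segment breakdown this enables. It assumes you have exported visit-level rows containing the variation, a segment label (for example new vs returning, or device) and a converted flag; the export format and function name are hypothetical, not part of any Google Analytics API. In the made-up data, B wins overall (9% vs 7.5%) but loses among returning visitors.

```python
from collections import defaultdict


def conversion_by_segment(visits):
    """visits: iterable of (variation, segment, converted) tuples.
    Returns {(variation, segment): (conversions, visitors, rate)}."""
    counts = defaultdict(lambda: [0, 0])  # [conversions, visitors]
    for variation, segment, converted in visits:
        counts[(variation, segment)][0] += int(converted)
        counts[(variation, segment)][1] += 1
    return {key: (c, n, c / n) for key, (c, n) in counts.items()}


def make_visits(variation, segment, conversions, visitors):
    """Build hypothetical visit rows: the first `conversions` rows convert."""
    return [(variation, segment, i < conversions) for i in range(visitors)]


if __name__ == "__main__":
    visits = (make_visits("A", "new", 5, 100) + make_visits("A", "returning", 10, 100)
              + make_visits("B", "new", 10, 100) + make_visits("B", "returning", 8, 100))
    for (variation, segment), (c, n, rate) in sorted(conversion_by_segment(visits).items()):
        print(f"{variation} {segment:<10} {c}/{n} = {rate:.1%}")
```

Small segments are noisy, so treat segment-level differences as leads for new hypotheses rather than conclusions.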

9. Prioritise, Plan and Document

You’ll no doubt have numerous test ideas, so it’s important to have a plan and prioritise your tests accordingly. To help you do this, consider a framework such as PIE, which scores each test idea on potential, importance and ease so you can rank them.

Potential: How much improvement can be made on the page?

Importance: How valuable is the traffic to this page? (How much would a 5% or 10% uplift be worth?)

Ease: How complicated will the test be to implement on the page/template?
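A PIE backlog can live in a spreadsheet, but the scoring logic is simple enough to sketch. This assumes, as PIE is commonly described, that each criterion is scored from 1 to 10 and the three scores are averaged; the class, function and test ideas below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    potential: int   # 1 to 10: how much improvement can be made on the page?
    importance: int  # 1 to 10: how valuable is the traffic to this page?
    ease: int        # 1 to 10: how easy is the test to implement?

    @property
    def pie_score(self) -> float:
        return (self.potential + self.importance + self.ease) / 3


def prioritise(ideas):
    """Return ideas ordered from highest to lowest PIE score."""
    return sorted(ideas, key=lambda idea: idea.pie_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        TestIdea("Homepage hero redesign", potential=8, importance=7, ease=3),
        TestIdea("Checkout trust badges", potential=6, importance=9, ease=8),
        TestIdea("Product page reviews", potential=7, importance=8, ease=6),
    ]
    for idea in prioritise(backlog):
        print(f"{idea.pie_score:.1f}  {idea.name}")
```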

Once you’ve planned and prioritised your tests, be sure to communicate your testing program to the wider business. Get people engaged with your tests: notify them when tests go live, share results and learnings, and invite them to submit test ideas.

And, finally, keep a record of your tests and results. Document the test hypotheses, screenshots of the variations and the results. This lets you refer back at a later date and ensures you don’t waste time repeating a test you’ve already validly completed.